As technology continues to develop, we rely on machines more and more to undertake complex and time-intensive tasks in everyday life. Through modern machine learning, digital systems can now learn and execute tasks far quicker than humanly possible and with highly consistent accuracy.

With superfast learning comes superfast creativity, and artists have taken to the computer to find ways of using these systems to form new artistic processes. While machine learning may seem like another language - one only understood by computers - it isn’t as complicated as you may think. With that being said, let's look at what a GAN is and how exactly it works.

The history of GAN

Generative adversarial networks (GANs) are a framework for machine learning created by Ian Goodfellow and his team in 2014. The system consists of two neural networks, which are pitted against one another. As each network races to get one up on the other, the process of continual refinement drives the quality of the output to the point that it could pass as part of the training set.

Generators and Discriminators

The two systems can be described as a generator and a discriminator. The discriminator is given the training data set and is tasked with discerning whether a work is real or fake given the references provided.

The generator, on the other hand, has the task of fooling the discriminator. Starting with a series of random outputs, the generator receives feedback from the discriminator. It makes small improvements each iteration to increase the odds that the work is guessed as real.

Due to the nature of this feedback loop, the system is in a constant process of generating new training material for itself. Simply put, every time the generator creates a work that is determined to be fake, the discriminator gets a little bit better at understanding what makes an image fake, while the generator gets a little bit better at creating outputs that will fool the discriminator.
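The feedback loop described above can be sketched as a toy example. This is a minimal illustration rather than how an art GAN is actually built: the "works" here are single numbers, the hypothetical training set is a cluster of values near 4, and the names `train_toy_gan`, `a`, `b`, `w` and `c` are assumptions made for this sketch. Each network is reduced to two adjustable values, and no claim is made that this tiny setup converges well.

```python
import math
import random

def sigmoid(z):
    # Numerically safe logistic function: squashes any number into the range 0..1
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_toy_gan(steps=2000, lr=0.01, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: fake = a * noise + b
    w, c = 0.1, 0.0  # discriminator: p_real = sigmoid(w * x + c)
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)   # one sample from the hypothetical training set
        noise = rng.gauss(0.0, 1.0)  # random input for the generator
        fake = a * noise + b

        # Discriminator step: push its guess towards 1 for real and 0 for fake.
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        grad_w = (d_real - 1.0) * real + d_fake * fake
        grad_c = (d_real - 1.0) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: use the discriminator's feedback to look more "real".
        d_fake = sigmoid(w * fake + c)
        grad_fake = (d_fake - 1.0) * w  # how the fake should move to fool D
        a -= lr * grad_fake * noise
        b -= lr * grad_fake
    return (a, b), (w, c)

(gen_a, gen_b), (disc_w, disc_c) = train_toy_gan()
print("generator parameters:", gen_a, gen_b)
print("discriminator parameters:", disc_w, disc_c)
```

The two steps alternate on every pass through the loop, which is the race the article describes: the discriminator updates first on one real and one fake sample, then the generator updates using the discriminator's fresh verdict.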

Varun Vinod Salian: Gands Training for Art

Objective Function

The whole system relies on an objective function. In this simplified picture, the function is the percentage of works that are correctly guessed as real or fake. Each network strives to tip this function in its favour: the discriminator wants a higher percentage of correct guesses, and the generator a lower one.
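As a rough sketch of this scoring, the snippet below counts correct guesses for a handful of made-up verdicts. The function names and the sample data are hypothetical and only there for illustration. It is also worth noting that the original GAN formulation scores the networks with a value function built from log-probabilities rather than a raw percentage, so treat this as the intuition and not the exact maths.

```python
def discriminator_accuracy(guesses, truths):
    """Fraction of works labelled correctly (True = real, False = fake)."""
    if len(guesses) != len(truths) or not guesses:
        raise ValueError("need two non-empty lists of equal length")
    correct = sum(1 for g, t in zip(guesses, truths) if g == t)
    return correct / len(guesses)

def generator_fooling_rate(guesses, truths):
    """Fraction of fake works that the discriminator guessed were real."""
    fake_guesses = [g for g, t in zip(guesses, truths) if not t]
    if not fake_guesses:
        raise ValueError("no fake works to score")
    return sum(1 for g in fake_guesses if g) / len(fake_guesses)

# Made-up example: four works, two real and two generated.
guesses = [True, True, False, False]   # what the discriminator said
truths = [True, False, False, True]    # what each work really was

print("discriminator accuracy:", discriminator_accuracy(guesses, truths))
print("generator fooling rate:", generator_fooling_rate(guesses, truths))
```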

This guiding principle determines how each network evolves over iteration, and to better understand this process of development, we need to take a closer look at how neural networks operate.

Neural Networks

A neural network is the brain of each system, taking a set of inputs and producing an output. This is done via a complex network of neurons, each with its own weights and threshold.

A neuron is a small equation that only produces an output (or "fires") once its threshold is passed. Each input to the neuron is assigned a weight, a value determining how important that element of the input is. Once the inputs are received, each one is multiplied by its weight and the results are summed together. It is this total value, compared against the threshold, that determines whether the neuron fires or not. In practice, modern networks usually swap the hard threshold for a smooth activation function, but the principle is the same.
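A single neuron of this kind fits in a few lines. The sketch below assumes the common reading in which each input is multiplied by its weight before everything is summed and compared with the threshold; the function name and the example numbers are made up for illustration.

```python
def neuron_fires(inputs, weights, threshold):
    """Return True when the weighted sum of the inputs reaches the threshold."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    total = sum(x * w for x, w in zip(inputs, weights))
    return total >= threshold

inputs = [1.0, 0.5]
weights = [0.8, -0.4]  # the first input matters more; the second counts against firing

print(neuron_fires(inputs, weights, threshold=0.5))
print(neuron_fires(inputs, weights, threshold=0.7))
```

With these numbers the weighted sum is roughly 0.6, so the neuron fires at a threshold of 0.5 but stays quiet at 0.7.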

Once each neuron has determined whether it will fire or not, and the respective calculations have been made, the result is output. For the generator, the first output will be effectively random (typically a grid of noise), which is then passed to the discriminator for feedback.

Once the discriminator has guessed, the feedback is fed back into the generator's network. Using this feedback, the system determines which parts worked well and which didn’t. Once this data has been processed, the weights are adjusted to reinforce the successful parts and improve the parts that didn’t work so well. This is done by adjusting the importance of certain values of the input, thereby shifting the tipping point of each neuron so that the network produces the most successful possible output.
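To make the weight adjustment concrete, here is a minimal sketch of one update for a single neuron. It assumes a smooth sigmoid activation in place of a hard threshold (so that "how wrong was I?" can be measured) and a plain gradient-descent step; the function names and the learning rate are illustrative choices, not part of any particular GAN.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, inputs):
    """Output of one sigmoid neuron: a value between 0 and 1."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)))

def update_weights(weights, inputs, target, learning_rate=0.1):
    """One gradient-descent step that nudges the output towards the target."""
    error = predict(weights, inputs) - target  # positive if the output was too high
    # Each weight moves in proportion to the error and to its own input.
    return [w - learning_rate * error * x for w, x in zip(weights, inputs)]

weights = [0.0, 0.0]
inputs = [1.0, 2.0]

before = predict(weights, inputs)
weights = update_weights(weights, inputs, target=1.0)
after = predict(weights, inputs)
print(before, "->", after)  # the output moves closer to the target of 1.0
```

Inputs with larger values receive larger adjustments, which is one way of reading "adjusting the importance of certain values of the input".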

The discriminator operates on the same principle. However, instead of using a random input, it uses the training data as a reference. Therefore it adjusts its weights based on what constitutes a real work, and each correct guess helps guide further adjustments to determine what makes a work real or fake, increasing the percentage of correct guesses.

Novelty in GAN

Once you understand how GANs operate, it should be no surprise that these systems are incredibly effective at emulating works based on the reference sets provided. While some GAN-based artworks may use the learning process itself as a piece of art (as is the case with works by Amir Zhussupov), generating a work that is actually new and unique in some way requires some additional systems.

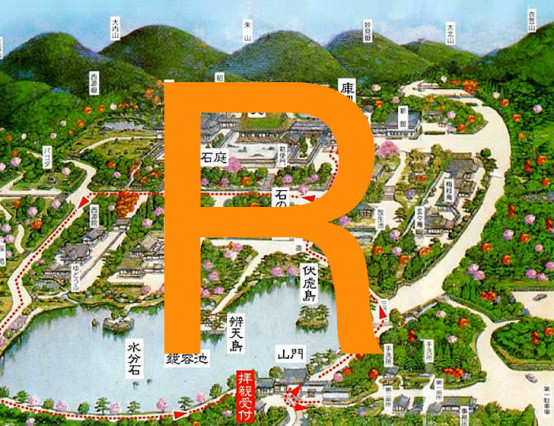

One such example of a GAN creating novel works is GANGogh. Rather than using a single dataset, the discriminator is first trained on multiple styles of painting. As a result, it is given the additional task of guessing which style the generator output is (as well as whether it is real or fake).

On the other hand, the generator is tasked with creating a work that is both guessed as real and guessed as a style that isn’t the one used to generate the work. This creates a cross-pollination of a work that is deemed to be successful in one style but has the aesthetics of another, synthesising the two.

The CAN (creative adversarial network) framework uses the style label in a very different way. In this network, the generator must create a work that is determined to be real but deviates enough from the established styles to confuse the style label. The aim here isn’t to get as close as possible to another style while remaining accurate to the first label (as with GANGogh). Rather, the aim is to create an ambiguous style, moving further away from learned practices in favour of unique aesthetic choices.
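One simple way to put a number on "ambiguous style" is to compare the discriminator's style guesses against a perfectly even spread across all styles. The sketch below is written in that spirit and is a simplification, not the CAN authors' exact formulation; the function name, the four-style setup and the probabilities are all hypothetical.

```python
import math

def style_ambiguity_loss(style_probs):
    """Cross-entropy between an even spread of styles and the predicted spread.

    Lower is better for the generator: the minimum is reached when the
    discriminator considers every style equally likely.
    """
    k = len(style_probs)
    if k == 0:
        raise ValueError("need at least one style probability")
    # max(..., 1e-12) guards against log(0) when a style is ruled out entirely
    return -sum((1.0 / k) * math.log(max(p, 1e-12)) for p in style_probs)

confident = [0.97, 0.01, 0.01, 0.01]   # discriminator is sure of the first style
ambiguous = [0.25, 0.25, 0.25, 0.25]   # discriminator cannot pick a style

print("confident guess:", style_ambiguity_loss(confident))
print("ambiguous guess:", style_ambiguity_loss(ambiguous))
```

A generator trained to lower this score, while still being judged real, is pushed towards works that do not sit comfortably in any one learned style.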

Deep learning in art is still a relatively new practice. However, with cheaper and more advanced computers, and more awareness about programming and its creative potential, more artists are taking to computers to develop fresh new ways to push the envelope in digital art.

Hopefully, this whistle-stop tour of how GANs work has helped to demystify the black box of machine learning. As with beginning any new artistic pursuit, the world of creative coding is equal parts exciting and dauntingly vast. However, everybody has to start somewhere, and with the wealth of knowledge in courses, forums, and videos online, there has been no better time to start learning.
