In short: not much that we didn’t already know, but it is still very cool.

AI has oft been claimed as the herald of the creative apocalypse: step away from your keyboards, young musicians, for the machines are here and they can make better music than you can, or so the claims go. CGP Grey, a very popular YouTuber who makes brilliant video essays on all manner of topics, made a video with the suitably ominous title “Humans Need Not Apply” about AI, including his prediction for the computer’s future role in creating music, and that prediction looks pretty bleak for us human musicians. I’d highly recommend watching the whole video, but here’s an extract (starting at 11:10):

“Creativity may feel like magic but it isn’t. The brain is a complicated machine[...] but that hasn’t stopped us from trying to simulate it. [...] By the way, this music in the background you’re listening to? It was written by a bot. Her name is Emily Howell and she can write an infinite amount of new music all day for free. And people can’t tell the difference between her and human composers when put to a blind test.”

It all sounds suitably apocalyptic, but do refrain from rushing to the supermarket to stock up on cans of baked beans for your nuclear bunker, as there may be hope for humanity yet. Or rather, to assume that AI is going to take over from the creative mind is to misunderstand how both creativity and machine learning work. Computers aren’t the threat we think, and the machines are not coming for our jobs, so lay down your pitchforks (or should I say tuning forks? No… no, I shouldn’t… That would be bad and I should feel bad…).

How machines learn

I’m not going to pretend to be some kind of authority on AI, but even a quick look at the basic concepts of how computers learn shows the absurdity of assuming that they can instantly become creative in the same way that they become proficient at non-creative tasks, simply because the concept of creativity runs directly counter to how computers “learn” to do anything.

In many models of machine learning, a machine is fed raw data (often a LOT of raw data) and asked to analyse it and do something with it. Its output is rated, and the better a particular behaviour scores in the trials, the more heavily the computer weights it, making it more likely to do the same thing again. This is how the simulations you may have seen of AIs learning to walk or to complete Mario levels work.

But this whole process depends on one pivotal idea: positive feedback. For a machine to learn using these models, it must be trying to achieve something, a goal set by its programmer. For the Mario AI, its creator used the distance Mario moved to the right as the measure of success for each generation of the AI. Similarly, for the DeepMind AI, the programmers told the computer to value distance moved towards the goal as its measure of success. You may already see the problem with this: how do we tell a computer what “good” music sounds like? Emily Howell works by generating music that her creator, composer David Cope, then judges based on whether or not he likes it.

By encouraging and discouraging the program, Cope attempts to "teach" it to compose music more to his liking. - Cope
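To make the feedback loop concrete, here is a toy sketch. It uses hill climbing, about the simplest learn-from-feedback method there is, rather than whatever richer techniques the projects above actually use, and every name in it (`mutate`, `hill_climb`, `distance_judge`, `human_judge`) is hypothetical. Melodies are lists of MIDI note numbers. The interesting part is the contrast between the two judges: one is a formula in the spirit of “distance Mario moved to the right”, while the other can only ask a person whether they like the result.

```python
import random

# Toy feedback loop: make a variation, score it, keep it if it scored well.
# Hypothetical throughout; not the code behind the Mario AI, DeepMind or Emily Howell.

def mutate(melody, rng):
    """Copy the melody and nudge one randomly chosen note up or down."""
    new = list(melody)
    i = rng.randrange(len(new))
    new[i] += rng.choice([-2, -1, 1, 2])
    return new

def hill_climb(start, judge, steps=200, seed=0):
    """Keep every variation the judge scores at least as well as the current best."""
    rng = random.Random(seed)
    best, best_score = list(start), judge(start)
    for _ in range(steps):
        candidate = mutate(best, rng)
        score = judge(candidate)
        if score >= best_score:  # the feedback: reinforce whatever scored well
            best, best_score = candidate, score
    return best, best_score

def distance_judge(melody):
    """Like 'distance Mario moved right': a goal that is trivial to measure."""
    return melody[-1] - melody[0]

def human_judge(melody):
    """Cope-style feedback: the only score is whether a person likes it."""
    answer = input(f"Do you like {melody}? [y/n] ")
    return 1 if answer.strip().lower() == "y" else 0

if __name__ == "__main__":
    start = [60, 62, 64, 65, 67]  # MIDI notes C D E F G
    print(hill_climb(start, distance_judge))
```

Swapping `distance_judge` for `human_judge` leaves the learning loop untouched; what changes is that the score now depends entirely on one listener’s taste, which is exactly the situation described for Emily Howell.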

The problem of “good” music

In the case of Emily Howell, the “goal” that the machine is working towards is whether Cope rates the music it creates positively or negatively. Literally, whether he likes it or not. But as the likes of Arnold Schoenberg or John Cage would ask you: is that the point of music? Are we just supposed to “like” it?

Whilst not everyone may follow Schoenberg’s or Cage’s philosophies (I know many who would put forward a convincing argument that Cage’s infamous 4′33″ is not music at all), it does raise a valid point: how can we tell a machine what music should and should not be like when we can’t even decide on that ourselves?

From the moment the first composers of Renaissance polyphony began writing music for the church, they faced critics who claimed that complicated counterpoint would distract a congregation from worship. Jazz was often derided as the “Devil’s Music” for its rebellious use of harmony, and David Cameron famously criticised the cultural value of Grime music, only to be called a “donut” by Lethal Bizzle in response. Should music challenge our perceptions of society? Should it convey a narrative? Should it strive for some ineffable quality that no other medium can convey? Scholars have debated this for centuries and still cannot agree, so how do we explain it to an AI?

And even if we do set out parameters for an AI to follow, we still face problems. For a start, the entire process still requires a human composer sat at the keyboard, with an intricate understanding of compositional processes and techniques, to teach the AI how to write the music. Even then, there is a problem: once the AI has learnt a style that is well received, it will keep churning out lovely, but very similar-sounding, music. A look over Emily Howell’s compositions shows that much of her music sounds incredibly similar and follows very strict formulae: either abstract arpeggio figures that move through different harmonies at regular intervals, or strictly formulaic fugues. Perhaps in a blind test Howell’s music is indistinguishable from human composition, but once you’ve heard an example of her work, it becomes increasingly easy to guess what her other pieces will sound like, simply because the works are so similar to one another.

The Doodle

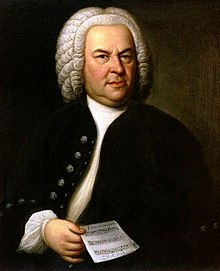

Which then leads us to the Google Doodle. What does it actually do, and how does it do it? In short, the program lets a user enter a two-bar melody in 4/4 using crotchet and quaver rhythms. When the user tells the program to harmonise, the algorithm, drawing on what it has learnt about Bach’s chorales through machine learning, attempts to harmonise the melody in a manner it believes Bach might have used.
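One way to picture the Doodle’s input constraint is as a fixed grid. The sketch below is an assumption for illustration, not the Doodle’s real data format: durations are counted in quavers (a crotchet is 2, a quaver is 1), pitches are MIDI note numbers, and a valid melody must fill exactly two bars of 4/4, i.e. 16 quaver steps. The real model may well use a finer grid, and the function name `to_grid` is hypothetical.

```python
# Hypothetical encoding of a Doodle-style melody: (midi_pitch, duration) pairs,
# with durations counted in quavers.
CROTCHET, QUAVER = 2, 1
QUAVERS_PER_BAR = 8  # 4/4 time
NUM_BARS = 2

def to_grid(melody):
    """Check the two-bar limit and spread each note over the quaver steps it lasts."""
    expected = QUAVERS_PER_BAR * NUM_BARS
    total = sum(duration for _, duration in melody)
    if total != expected:
        raise ValueError(f"melody fills {total} quavers, expected {expected}")
    grid = []
    for pitch, duration in melody:
        if duration not in (CROTCHET, QUAVER):
            raise ValueError("only crotchets and quavers are allowed")
        grid.extend([pitch] * duration)
    return grid

if __name__ == "__main__":
    melody = [(67, CROTCHET), (67, QUAVER), (69, QUAVER), (71, CROTCHET), (67, CROTCHET),
              (72, CROTCHET), (71, CROTCHET), (69, CROTCHET), (67, CROTCHET)]
    print(to_grid(melody))  # 16 quaver steps
```

Spreading a held note across several steps loses the difference between one long note and repeated short ones; a fuller encoding would also need to mark where each note starts, which this sketch skips for brevity.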

To do this, the Doodle uses a machine learning model called Coconet. Exactly how Coconet works is probably best left to its creators to describe, but the short version is this: Coconet is trained on many of Bach’s chorales (four-part counterpoint). Notes are erased from the scores, and the machine is tasked with calculating what the missing notes should be. The feedback in this case is whether the notes the machine used to fill in the blanks are the ones Bach himself wrote.
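The “erase and fill in” idea can be sketched in a few lines. This is a toy, not Coconet: the score is a grid of four voices by time steps holding MIDI pitches, some cells are hidden at random, and a hypothetical `guess_note` function (a naive rule that copies the nearest visible note in the same voice) stands in for Coconet’s trained neural network. The accuracy returned plays the role of the feedback: how many erased notes were restored to what was originally written. The example fragment is a made-up cadence, not a real Bach chorale.

```python
import random

# Toy version of "erase notes, then ask the machine to fill them in".
# score[voice][step] holds a MIDI pitch; voices are soprano, alto, tenor, bass.
# guess_note is a naive, hypothetical stand-in for Coconet's neural network.

def mask_score(score, fraction, rng):
    """Pick a random set of (voice, step) cells to erase."""
    cells = [(v, t) for v in range(len(score)) for t in range(len(score[0]))]
    return set(rng.sample(cells, int(len(cells) * fraction)))

def guess_note(score, masked, voice, step):
    """Guess an erased note by copying the nearest visible note in the same voice."""
    n = len(score[voice])
    for offset in range(1, n):
        for t in (step - offset, step + offset):
            if 0 <= t < n and (voice, t) not in masked:
                return score[voice][t]
    return 60  # whole voice erased: fall back to middle C

def fill_in_accuracy(score, fraction=0.25, seed=0):
    """Share of erased notes restored to the original pitch (the feedback signal)."""
    rng = random.Random(seed)
    masked = mask_score(score, fraction, rng)
    if not masked:
        return 1.0
    correct = sum(guess_note(score, masked, v, t) == score[v][t] for v, t in masked)
    return correct / len(masked)

# A made-up I-IV-V-I cadence in C major, NOT an actual Bach chorale.
EXAMPLE = [
    [72, 72, 71, 72],  # soprano
    [67, 69, 67, 67],  # alto
    [64, 65, 62, 64],  # tenor
    [48, 53, 55, 48],  # bass
]

if __name__ == "__main__":
    print(fill_in_accuracy(EXAMPLE))
```

A real model is trained by repeating this over a whole corpus and adjusting itself whenever its guesses disagree with the original notes; the naive rule here never learns, which is the gap the neural network fills.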

Google’s Bach Doodle has some flaws. When I used it, I noticed that it would sometimes break many of the rules of baroque counterpoint (parallel fifths and octaves, sevenths that don’t resolve properly, voice-leading issues), but it undoubtedly comes up with some very convincing harmonisations at times. For a look at some of the shortcomings of its harmonisations, I would recommend this video by Adam Neely. The program’s inaccuracies compared with baroque counterpoint are not its biggest flaw, though. No, its biggest flaw is a failure to understand what made Bach’s music Bach: innovation and taste. This is what AI cannot account for. As any frustrated harmony student will tell you, Bach broke many of his own rules. Sometimes he wrote entire fugues on subjects that seem to defy those rules outright, and yet the music still works. The genius of Bach was knowing when to break the rules and why doing so would work.
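Rules like “no parallel fifths or octaves” are mechanical enough to check automatically, which is part of why the Doodle’s occasional slips are easy to spot. Here is a minimal checker for two voices, assuming one MIDI pitch per beat in each voice and no rests. It flags a move where both voices travel in the same direction and the interval, reduced modulo 12 so that compound intervals count and unisons are grouped with octaves, is a perfect fifth or octave both before and after. The function name is hypothetical, and real counterpoint teaching has more nuance than this.

```python
# Flag parallel fifths and octaves between two voices.
# Assumes one MIDI pitch per beat in each voice, no rests or ties.
PERFECT = {7: "fifth", 0: "octave"}  # interval mod 12; 0 also covers unisons

def parallel_perfects(upper, lower):
    """Return (beat, kind) for each perfect interval followed by the same one, both voices moving the same way."""
    found = []
    for t in range(min(len(upper), len(lower)) - 1):
        before = (upper[t] - lower[t]) % 12
        after = (upper[t + 1] - lower[t + 1]) % 12
        upper_move = upper[t + 1] - upper[t]
        lower_move = lower[t + 1] - lower[t]
        same_direction = upper_move * lower_move > 0  # both move, in the same direction
        if before in PERFECT and before == after and same_direction:
            found.append((t, PERFECT[before]))
    return found

if __name__ == "__main__":
    print(parallel_perfects([67, 69, 71, 72], [60, 62, 55, 48]))
```

For example, `parallel_perfects([67, 69, 71, 72], [60, 62, 55, 48])` returns `[(0, "fifth")]`: G over C moving to A over D is a parallel fifth, while the later moves are not. What a checker like this cannot tell you is when Bach would have broken the rule on purpose.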

Coconet was developed by a team at Magenta, a Google research project.

What’s more, a given passage of melody can often be harmonised in several different ways that are equally valid and “legal” (in terms of the conventions of counterpoint writing). A composer in this situation has to decide on the best choice based not on a set of rules of harmony or voice-leading, but on a subjective judgement of what works better in the context. It is in that innately human flexibility that AI falls short of Bach.
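A tiny example shows how quickly the options multiply. As a simplifying assumption, consider only the seven root-position triads of C major and ignore voice leading altogether: every note of the scale already belongs to three different chords. The names `C_MAJOR_TRIADS` and `candidate_chords` are hypothetical.

```python
# Which root-position diatonic triads in C major contain a given melody note?
NOTE_NAMES = ["C", "C#", "D", "Eb", "E", "F", "F#", "G", "Ab", "A", "Bb", "B"]
C_MAJOR_TRIADS = {  # chord name -> pitch classes (0 = C)
    "C": {0, 4, 7},
    "Dm": {2, 5, 9},
    "Em": {4, 7, 11},
    "F": {5, 9, 0},
    "G": {7, 11, 2},
    "Am": {9, 0, 4},
    "Bdim": {11, 2, 5},
}

def candidate_chords(note_name):
    """List every triad above that contains the note, in scale order."""
    pitch_class = NOTE_NAMES.index(note_name)
    return [name for name, pcs in C_MAJOR_TRIADS.items() if pitch_class in pcs]

if __name__ == "__main__":
    for note in ["C", "D", "E", "F", "G", "A", "B"]:
        print(note, candidate_chords(note))
```

`candidate_chords("C")` returns `["C", "F", "Am"]`. Choosing between them, and between the inversions, sevenths and passing chords this sketch leaves out, is where the subjective judgement comes in.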

The future?

However, this is not to say that the AI isn’t very impressive. It is. In fact, once you realise that this program is not attempting to “automate” Bach, as many seem to have interpreted it, you can really begin to appreciate what the minds behind it were hoping to achieve. With little, if any, outside information, the AI managed to “learn” many of the complex rules and functions of harmony that form the foundation of baroque music, simply by looking at and analysing baroque music itself. That is really cool, and for that I think the computer deserves a cookie (I’ll stop now; the music pun was bad enough without tech ones too…).

Coconet and Magenta offer composers and creatives a new opportunity to create and express themselves with the assistance of AI tools. Machine learning can become a part of the creative and compositional process, and, importantly, the Google Doodle is not the final, or even necessarily the intended, purpose of such software. If anything, the Doodle is a demonstration of the developing power of machine learning and its potential applications within the music sphere; a power that, like all technological innovations, may seem scary at first, but will prove to enhance what we do in the creative sphere rather than supplant it.

Wow, what an in-depth blogpost! I'm certainly interested to see how much AI will change the face of music (as we know it) going forward!